Lately I have been working with XML extrusion bridges for scenery and while doing so I noticed some weird artifacts. Even though I out the end points under the ground, they sometimes still float above it. And I also noticed that two bridges don’t always connect even though you specify the same position and altitude for the connecting point.

So I decided to dive a little deeper and see if I can understand what is going on. And after examining a lot of bridges I have come to the conclusion that the scenery engine adds a weird elevation offset to all bridges. And this offset is equal to the average height of all points of the bridge. So the taller your bridge is, the more the bridge is raised and thus also the more your end points will float.

The good news is now that I understand the logic behind the offset it is easy to compensate for it, to make sure you get the bridge you expect. But I still don’t understand why it was implemented like this. I mean when you type an altitude in the XML file that’s what you expect to get, right?

SceneryDesign.org

SceneryDesign.org  I guess you already know the answer to the question posted in the title of this post. I’m afraid the answer was yes. This week two users (thanks to both of you) reported to me that scenProc is quite hungry for memory, especially when processing big files. Even 16 GB of RAM would easily be filled in certain cases.

I guess you already know the answer to the question posted in the title of this post. I’m afraid the answer was yes. This week two users (thanks to both of you) reported to me that scenProc is quite hungry for memory, especially when processing big files. Even 16 GB of RAM would easily be filled in certain cases.

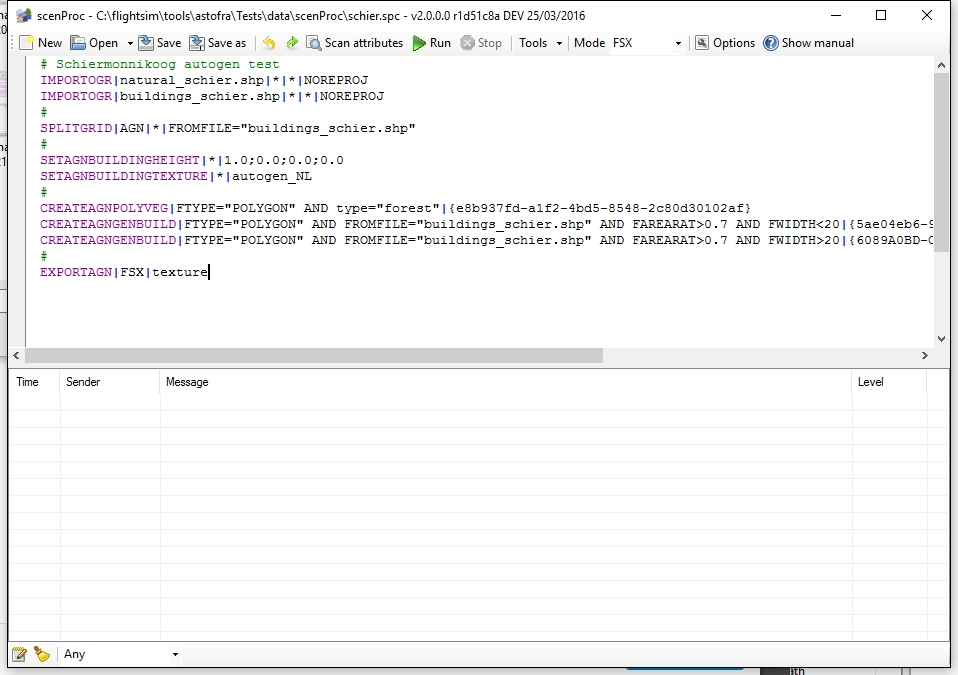

Just a early warning, the scenProc update with the new filter syntax is almost ready for release. I have just started to update the manual for all the changes, so hopefully in a few days I can put this feature in the development release.

Just a early warning, the scenProc update with the new filter syntax is almost ready for release. I have just started to update the manual for all the changes, so hopefully in a few days I can put this feature in the development release.